Waste separation is good for the environment. So is making our software more efficient and sustainable. I don’t know if the .NET developers at Microsoft thought of that, but they applied a similar concept in the design of their garbage collector by separating small objects from large ones. Good to know? Yes, because this internal behaviour has considerable performance implications to keep in mind when dealing with large amounts of memory, for example when deserializing big JSON files.

TL;DR:

For better application performance, use appropriate Stream implementations when dealing with potentially large objects (85,000 bytes or more) to keep them from landing on the Large Object Heap (LOH).

What does a Garbage Collector do?

Just like in real life, garbage collection does the indispensable job of keeping our places clean of all the trash we produce in the course of our lives. Most of the time, it does this more or less in the background without us noticing. Without it, we would eventually drown in our own leftovers. It’s basically the same with .NET applications. During their lifetime, they consume computer memory to hold the objects and data they work with. However, this memory is limited and must be freed at some point after allocation. The .NET garbage collector (GC), as part of the Common Language Runtime (CLR), is exactly the piece of software responsible for managing our application's memory for us, so we don’t have to, in contrast to low-level languages like C.

Generation gap

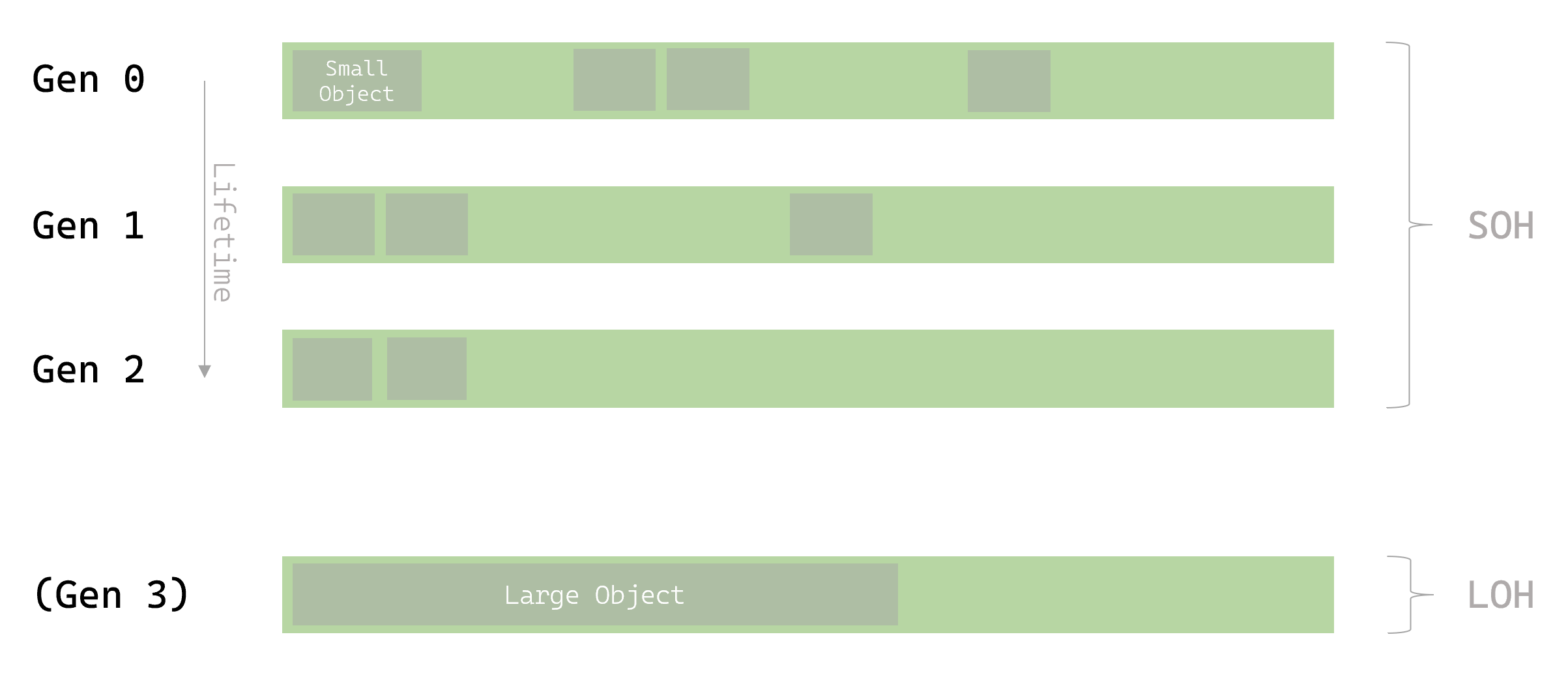

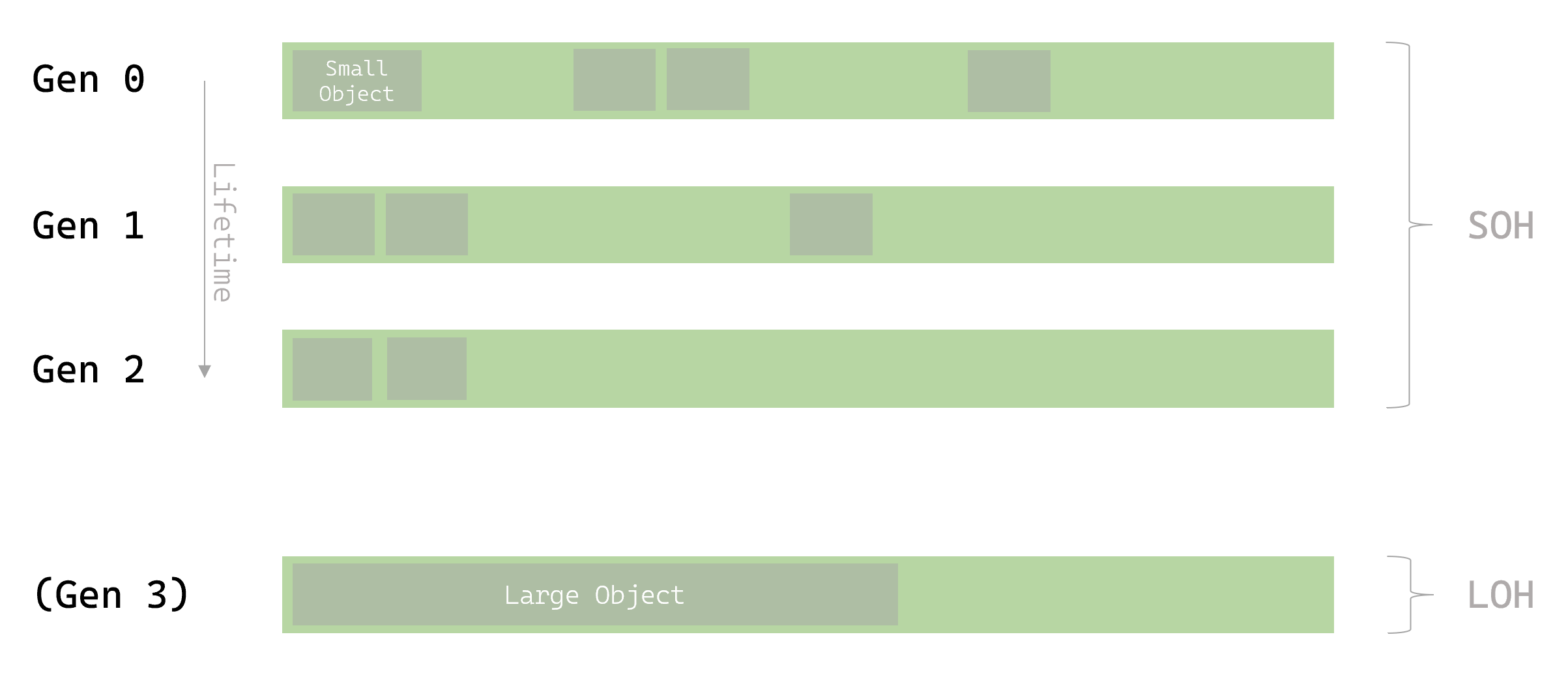

The .NET GC is a so-called generational garbage collector. That means it separates the memory it manages (the managed heap) into multiple generations, which can be thought of as logical partitions. As memory is allocated and released, those partitions may become fragmented over time and have to be compacted. A cycle in which memory is released and compacted is called a collection. It is cheaper to perform a collection over a single generation than to walk the whole managed heap. Since newly allocated objects tend to die sooner than objects that have already survived for a while, and therefore have to be collected more frequently, the managed heap is divided into three generations (0, 1 and 2). Short-lived objects live in generation 0, while long-lived objects get promoted to generation 2 over time. Generation 1 serves as a buffer in between. Because collecting a generation also collects all lower generations, an expensive generation 2 collection is called a full garbage collection. These generations contain small objects only and therefore form the so-called Small Object Heap (SOH). Large objects are treated a bit differently and land on a special Large Object Heap (LOH).
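To make the promotion between generations tangible, here is a minimal sketch that asks the runtime which generation an object currently belongs to. It relies only on `GC.GetGeneration`, `GC.Collect` and `GC.KeepAlive`; the class and method names are made up for illustration, and forcing collections like this is meant for experiments, not for production code.

```csharp
using System;

public static class GenerationDemo
{
    // Returns the generation of a small object right after allocation
    // and again after two forced collections.
    public static (int Before, int After) Promote()
    {
        var obj = new object();
        int before = GC.GetGeneration(obj); // fresh small objects start in generation 0

        // Each collection the object survives may promote it by one generation.
        GC.Collect();
        GC.Collect();

        int after = GC.GetGeneration(obj);
        GC.KeepAlive(obj); // keep the object reachable until this point
        return (before, after);
    }
}
```

Calling `GenerationDemo.Promote()` typically reports generation 0 before and generation 2 after the collections, although the exact promotion behaviour is up to the runtime and its GC configuration.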

Large waste

New objects live in generation 0. But there is an exception to that. If those new objects are very large - 85,000 bytes or more by default, to be precise - they deserve a special place in hell, which is called the Large Object Heap (LOH) and is sometimes referred to as generation 3. The LOH is a physical generation that is logically collected as part of generation 2, that is, during a full garbage collection. As compaction is even more expensive here, the GC skips it for generation 3 by default. Instead, adjacent dead objects are linked into a free list on release, and that free space can be reused later to satisfy another large object allocation request.
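The size threshold can be observed directly. The following sketch allocates a byte array and returns the generation the runtime reports for it; because the LOH is logically part of generation 2, a large array reports generation 2 immediately, without ever passing through generation 0. The helper name is hypothetical, and the sketch assumes the default LOH threshold has not been changed through configuration.

```csharp
using System;

public static class LohDemo
{
    // Allocates a byte array of the given length and returns the
    // generation the GC reports for it right after allocation.
    public static int GenerationOfNewArray(int length)
    {
        var buffer = new byte[length];
        return GC.GetGeneration(buffer);
    }
}
```

With default settings, `LohDemo.GenerationOfNewArray(1_000)` reports generation 0, while `LohDemo.GenerationOfNewArray(85_000)` reports generation 2. Note that the threshold applies to the total object size, so an array crosses it slightly below 85,000 elements because of the object header.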

There is an exceptionally well-written section in the Microsoft Docs on garbage collection in .NET, from which I acquired most of this knowledge. I strongly recommend that article to the interested reader for more details on the topic.

Okay, so what are the major performance implications here?

First is allocation cost. When you request memory from the runtime, the CLR guarantees that the memory you get is cleared (all bits set to 0). This takes time, and the larger the object, the longer it takes.

Second is collection cost. The LOH is only collected together with generation 2, so when large object allocations exceed the LOH's allocation threshold, a full garbage collection is triggered. This happens especially often when allocating temporary large objects. The performance impact is highest if generation 2 contains a lot of data to be collected.
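One rough way to see this effect is to count generation 2 collections while allocating temporary arrays. The sketch below uses `GC.CollectionCount(2)` for that; the class and method names are invented for illustration, and the numbers it produces depend heavily on the runtime version, the GC mode and whatever else the process is doing.

```csharp
using System;

public static class LohPressureDemo
{
    // Allocates `count` temporary byte arrays of `size` bytes each and returns
    // how many generation 2 collections happened in the meantime.
    public static int Gen2CollectionsDuring(int count, int size)
    {
        int before = GC.CollectionCount(2);

        for (int i = 0; i < count; i++)
        {
            var temp = new byte[size]; // becomes garbage right after this iteration
            temp[0] = 1;               // touch the array so it is actually used
        }

        return GC.CollectionCount(2) - before;
    }
}
```

Comparing `LohPressureDemo.Gen2CollectionsDuring(10_000, 100_000)` with `LohPressureDemo.Gen2CollectionsDuring(10_000, 1_000)` usually shows noticeably more full collections for the large arrays, but treat the result as an indication rather than a benchmark.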

Now, what can we as developers do about that when dealing with large objects? Streams to the rescue.

Garbage shredding

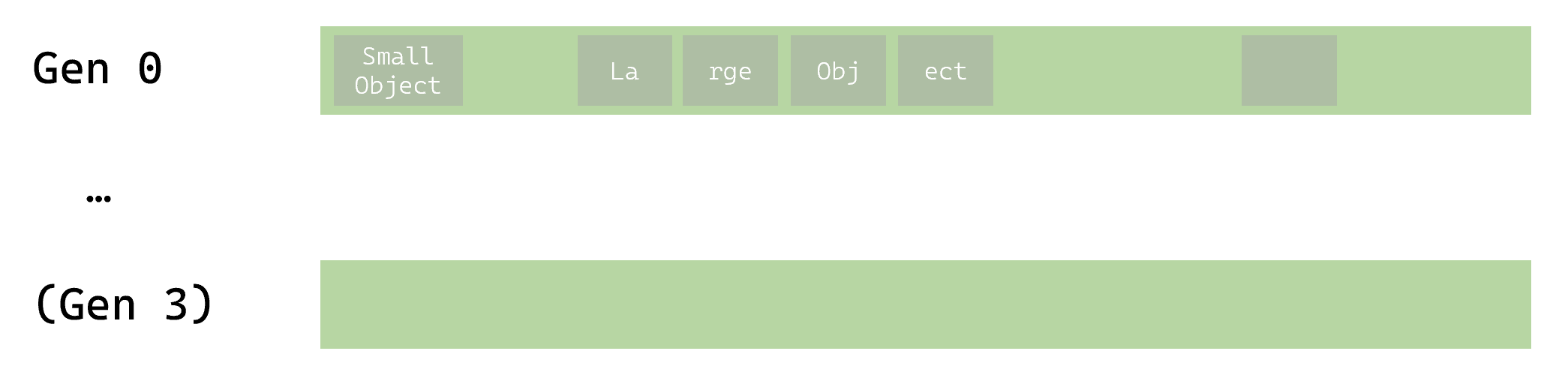

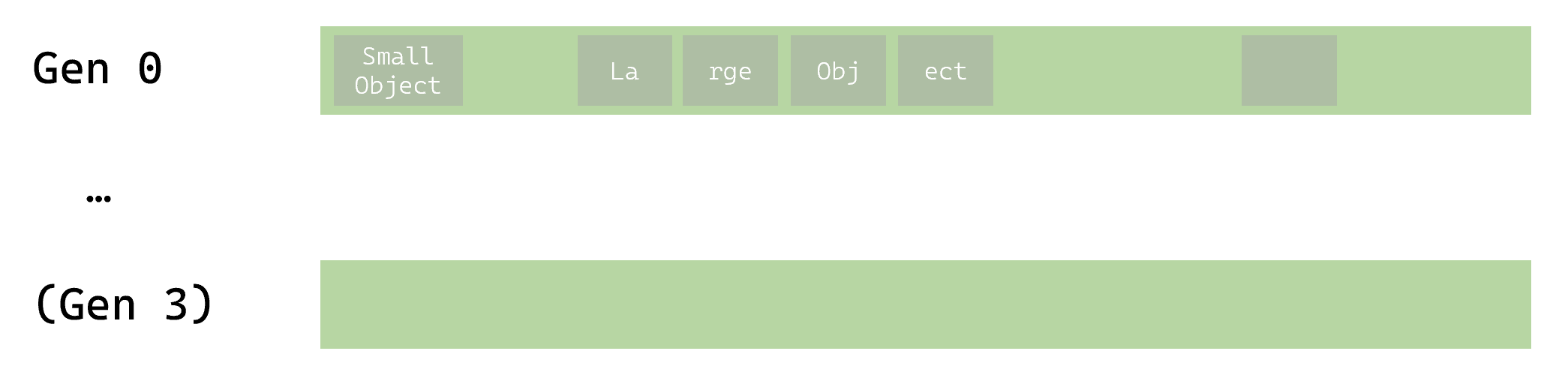

The best approach when dealing with large objects is to keep them from landing on the LOH in the first place by dividing them into smaller chunks and handling one chunk at a time. That is exactly what the most common implementations of the System.IO.Stream class do for us.
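As a minimal sketch of that chunking idea, the following helper walks through a stream of any size with a single small buffer that is reused for every chunk. Counting newline bytes is just a stand-in for real processing, and the class name, method name and 4 KB default buffer size are assumptions for illustration.

```csharp
using System.IO;

public static class ChunkedReader
{
    // Counts newline bytes in a stream while holding only one small,
    // reusable buffer in memory, no matter how large the stream is.
    public static long CountNewlines(Stream input, int bufferSize = 4096)
    {
        var buffer = new byte[bufferSize]; // far below the LOH threshold
        long newlines = 0;
        int read;

        // Read returns 0 once the end of the stream is reached.
        while ((read = input.Read(buffer, 0, buffer.Length)) > 0)
        {
            for (int i = 0; i < read; i++)
            {
                if (buffer[i] == (byte)'\n')
                {
                    newlines++;
                }
            }
        }

        return newlines;
    }
}
```

Used with `File.OpenRead(path)`, this processes a multi-gigabyte file without ever allocating a large array, whereas `File.ReadAllBytes(path)` would put the whole content into one big buffer.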

You also cut your big cardboard boxes into pieces before throwing them out in the trash can, right? So, next time you handle that large JSON file, do not read the entire string into memory but deserialize from a Stream instead. See the Newtonsoft performance tips for a good example.
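For illustration, here is a minimal sketch of deserializing straight from a Stream. To stay free of extra packages it uses the built-in System.Text.Json serializer rather than Newtonsoft, and it assumes .NET 6 or later for the synchronous `JsonSerializer.Deserialize<T>(Stream)` overload. The `Item` type and the method names are hypothetical.

```csharp
#nullable enable
using System.Collections.Generic;
using System.IO;
using System.Text.Json;

public static class JsonStreamDemo
{
    // Hypothetical payload type, for illustration only.
    public sealed class Item
    {
        public int Id { get; set; }
        public string? Name { get; set; }
    }

    // Deserializes a JSON array from a stream. The serializer reads the
    // input in chunks, so the document never exists as one big string.
    public static List<Item>? Load(Stream json)
    {
        return JsonSerializer.Deserialize<List<Item>>(json);
    }

    // Convenience wrapper that opens a file as a stream and deserializes it.
    public static List<Item>? LoadFile(string path)
    {
        using FileStream stream = File.OpenRead(path);
        return Load(stream);
    }
}
```

The pattern to avoid is `JsonSerializer.Deserialize<List<Item>>(File.ReadAllText(path))`, which first materializes the complete file as a single string. Keep in mind that streaming only removes that intermediate string; the deserialized result itself still has to fit in memory.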

Making our software more efficient is one easy way for us developers to directly help the environment. If you’re interested in what else can be done to build more sustainable software, I recommend having a look at our other blog post about Green IT.